

Object Detection Wiki

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.
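To make the definition concrete: a detector's output is usually a set of records, each holding a class label, a confidence score, and a bounding box, and a predicted box is compared with a ground-truth box by intersection-over-union (IoU). The sketch below assumes axis-aligned boxes in (x1, y1, x2, y2) pixel coordinates; `Detection` and `iou` are illustrative names, not part of any particular library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected object instance: class label, confidence, axis-aligned box."""
    label: str
    score: float
    x1: float  # left edge
    y1: float  # top edge
    x2: float  # right edge
    y2: float  # bottom edge


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two boxes; 0.0 when they do not overlap."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


pred = Detection("car", 0.9, 0, 0, 10, 10)    # hypothetical prediction
truth = Detection("car", 1.0, 5, 0, 15, 10)   # hypothetical ground truth
print(iou(pred, truth))
```

For these two boxes the overlap is 50 px² out of a union of 150 px², so the IoU is 1/3.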

Object Detection

First, Amusi wants to recommend a website. Open the link below and you will find a page that gets your blood pumping.

link:

https://handong1587.github.io/deep_learning/2015/10/09/object-detection.html

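When I first came across this URL I was surprised: the link says 2015/10/09, so I assumed it was an old resource, but the content genuinely amazed me. The collection lives on the personal homepage of the great handong, and there is no standalone GitHub repository for its Object Detection list. Inspired by it, I took the liberty (I have not yet obtained the author's permission) of condensing and supplementing handong's Object Detection material (admittedly like showing off in front of a master), and created a GitHub repository named awesome-object-detection.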

Awesome-Object-Detection

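Next, a closer look at this rather "copied" repository. The goal of awesome-object-detection is to provide a platform for learning object detection. What it offers: papers and their code (kept as up to date as possible!). Since Amusi is still a beginner, I can't yet write an introduction for every paper, but in-depth paper walkthroughs will follow. You are welcome to star and fork the repository and open pull requests for the object detection work you are following.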

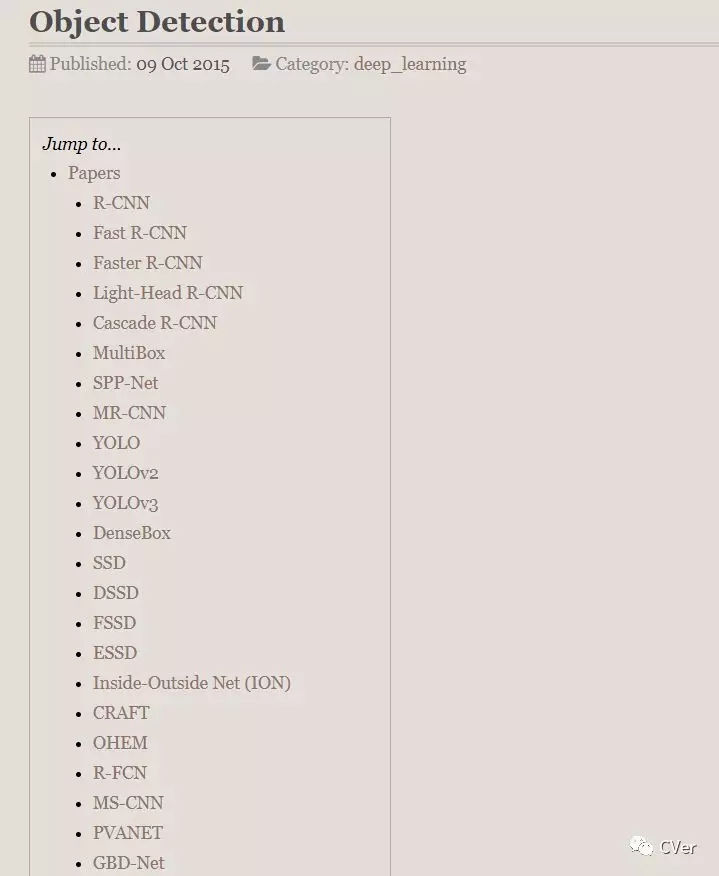

So let's see what useful material awesome-object-detection currently contains.

To save space, only the more important works are listed here:

The R-CNN trio (R-CNN, Fast R-CNN, and Faster R-CNN)

Light-Head R-CNN

Cascade R-CNN

The YOLO trio (YOLOv1, YOLOv2, YOLOv3)

The SSD family (SSD, DSSD, FSSD, ESSD, Pelee)

R-FCN

FPN

DSOD

RetinaNet

DetNet

...

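You are surely already familiar with the common R-CNN and YOLO series, and Amusi doesn't want to repeat them here (that would hardly be impressive). Instead, here are brief recommendations of two papers that show what awesome-object-detection is good for.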

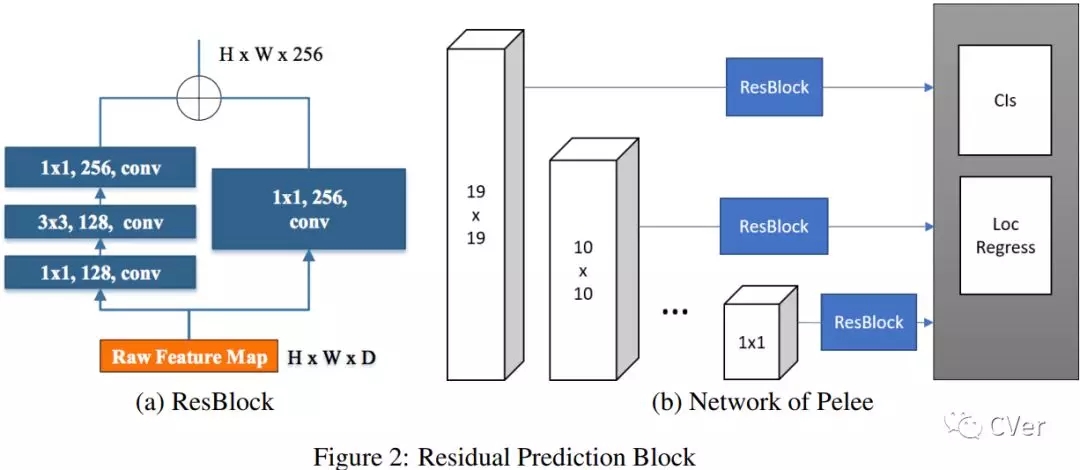

Pelee

"Pelee: A Real-Time Object Detection System on Mobile Devices"

intro: (ICLR 2018 workshop track)

arxiv: https://arxiv.org/abs/1804.06882

github: https://github.com/Robert-JunWang/Pelee

Abstract:An increasing need of running Convolutional Neural Network (CNN) models on mobile devices with limited computing power and memory resource encourages studies on efficient model design. A number of efficient architectures have been proposed in recent years, for example, MobileNet, ShuffleNet, and NASNet-A. However, all these models are heavily dependent on depthwise separable convolution which lacks efficient implementation in most deep learning frameworks. In this study, we propose an efficient architecture named PeleeNet, which is built with conventional convolution instead. On ImageNet ILSVRC 2012 dataset, our proposed PeleeNet achieves a higher accuracy by 0.6% (71.3% vs. 70.7%) and 11% lower computational cost than MobileNet, the state-of-the-art efficient architecture. Meanwhile, PeleeNet is only 66% of the model size of MobileNet. We then propose a real-time object detection system by combining PeleeNet with Single Shot MultiBox Detector (SSD) method and optimizing the architecture for fast speed. Our proposed detection system, named Pelee, achieves 76.4% mAP (mean average precision) on PASCAL VOC2007 and 22.4 mAP on MS COCO dataset at the speed of 17.1 FPS on iPhone 6s and 23.6 FPS on iPhone 8. The result on COCO outperforms YOLOv2 in consideration of a higher precision, 13.6 times lower computational cost and 11.3 times smaller model size. The code and models are open sourced.
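The abstract contrasts depthwise separable convolution (used by MobileNet, ShuffleNet, and NASNet-A) with the conventional convolution PeleeNet is built from. The sketch below counts multiply-accumulates (MACs) for one layer of each kind, assuming stride 1, "same" padding, and no bias; the layer shape is an arbitrary illustration, not taken from PeleeNet or MobileNet. It shows why separable layers look cheap on paper; the abstract's argument is that this arithmetic advantage does not translate into speed when a framework lacks an efficient depthwise implementation, which a MAC count alone cannot capture.

```python
def standard_conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs of a k x k convolution producing an h x w x c_out output (stride 1, no bias)."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs of a depthwise k x k conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    depthwise = h * w * c_in * k * k
    pointwise = h * w * c_in * c_out
    return depthwise + pointwise


# Illustrative layer shape (hypothetical, chosen only for the example).
h, w, c_in, c_out, k = 56, 56, 128, 128, 3
std = standard_conv_macs(h, w, c_in, c_out, k)
sep = depthwise_separable_macs(h, w, c_in, c_out, k)
print(f"standard:  {std:,} MACs")
print(f"separable: {sep:,} MACs ({sep / std:.3f} of standard)")
```

Analytically the ratio is 1/c_out + 1/k², so for a 3 x 3 kernel the separable layer needs roughly one ninth of the arithmetic once c_out is large.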

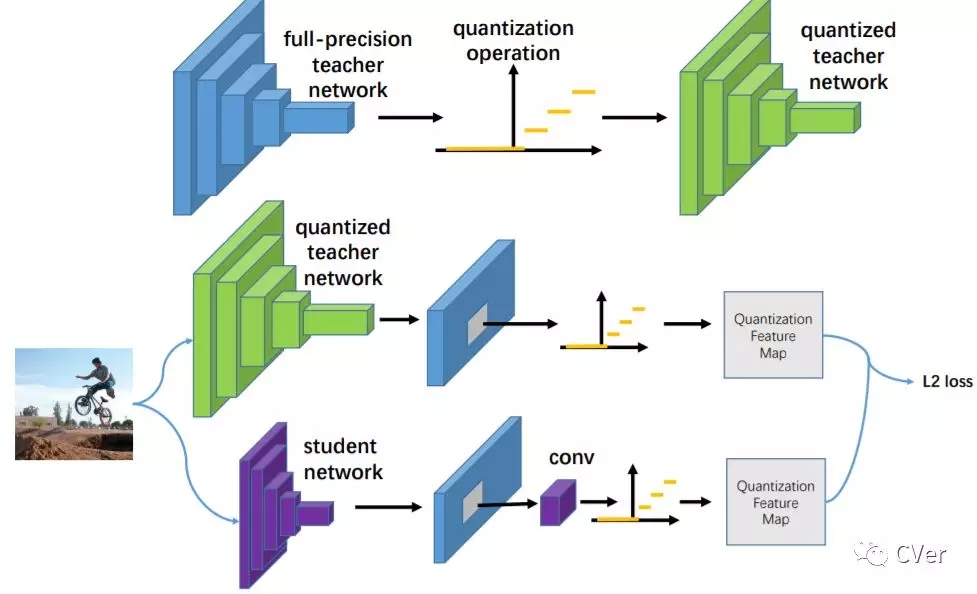

Quantization Mimic

"Quantization Mimic: Towards Very Tiny CNN for Object Detection"

Tsinghua University, The Chinese University of Hong Kong, and SenseTime

arxiv: https://arxiv.org/abs/1805.02152

Note: just look at the institutions behind this paper... It was posted to arXiv on 2018-05-06 (still hot off the press).

Abstract: In this paper, we propose a simple and general framework for training very tiny CNNs for object detection. Due to limited representation ability, it is challenging to train very tiny networks for complicated tasks like detection. To the best of our knowledge, our method, called Quantization Mimic, is the first one focusing on very tiny networks. We utilize two types of acceleration methods: mimic and quantization. Mimic improves the performance of a student network by transferring knowledge from a teacher network. Quantization converts a full-precision network to a quantized one without large degradation of performance. If the teacher network is quantized, the search scope of the student network will be smaller. Using this property of quantization, we propose Quantization Mimic. It first quantizes the large network, then mimic a quantized small network. We suggest the operation of quantization can help student network to match the feature maps from teacher network. To evaluate the generalization of our hypothesis, we carry out experiments on various popular CNNs including VGG and Resnet, as well as different detection frameworks including Faster R-CNN and R-FCN. Experiments on Pascal VOC and WIDER FACE verify our Quantization Mimic algorithm can be applied on various settings and outperforms state-of-the-art model acceleration methods given limited computing resources.
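The abstract's central intuition is that once the teacher's features are quantized, the student no longer has to reproduce each activation exactly; it only has to land in the same quantization bin. The toy sketch below illustrates that idea under stated assumptions: a uniform quantizer with a fixed step and a mean-squared mimic loss on flattened feature maps. `quantize`, `mimic_loss`, the step of 0.5, and the activation values are all hypothetical and not taken from the paper, whose quantizer and loss details may differ; this is not a training procedure.

```python
def quantize(features: list[float], step: float) -> list[float]:
    """Uniform quantization: snap each activation to the nearest multiple of `step`.

    Exact ties go to the even multiple, following Python's built-in round().
    """
    return [step * round(x / step) for x in features]


def mimic_loss(student: list[float], teacher: list[float]) -> float:
    """Mean squared error between flattened student and teacher feature maps."""
    if len(student) != len(teacher):
        raise ValueError("feature maps must have the same shape")
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(student)


teacher_fm = [0.12, 0.97, 1.48, 2.03]  # hypothetical teacher activations
student_fm = [0.20, 1.05, 1.40, 1.95]  # hypothetical student activations
step = 0.5                             # hypothetical quantization step

full = mimic_loss(student_fm, teacher_fm)
quant = mimic_loss(quantize(student_fm, step), quantize(teacher_fm, step))
print(f"full-precision mimic loss: {full:.4f}")
print(f"quantized mimic loss:      {quant:.4f}")
```

Here the full-precision loss is about 0.0064 while the quantized loss is exactly 0, because every student activation falls into the same bin as its teacher counterpart, a small-scale picture of the "smaller search scope" the abstract describes.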

Summary

The purpose of awesome-object-detection is to cover as much object detection work (papers and code) as possible. Since Amusi is still a beginner, please point out anything that is poorly organized or non-standard. Because the repository copies content directly from handong, I will immediately remove or modify anything that infringes copyright (I am currently trying to contact handong).

